FFAI Bot Bowl II: Initial Thoughts

Table of Contents

This week I came across a new RL competition that piqued my interest; Bot Bowl II. This is based on the tabletop game Blood bowl (BB) created by Games Workshop, originally invented as a parody of American football. As I want to take part, I thought I’d write down some of my initial thoughts about the competition here.

Challenges addressed in the comp #

From the FFAI site, two key challenges will be encountered in this contest:

1. High turn-wise branching factor #

“However, as all pieces on the board belonging to a player can be moved several times each turn, the turn-wise branching factor becomes overwhelming for traditional algorithms”.

In the paper associated with the competition, the branching factor (BF) is measured both analytically and empirically [1]. The experiments were:

Two agents taking uniformly sampled actions- 100 games

- Average 169.8 actions in total

- Average 10.6 actions per-turn

- Step-wise BF = 29.8

- Turn-wise BF = 1030

GrodBot (scripted agent)- 10 games. GrodBot runs a lot slower than a random sampler!

- 41.6 actions per-turn

- Average step-wise BF = 17.2

- Turn-wise BF = 1742

Analytically, it was estimated that the lower bound on the average turn-wise BF is 1050. For reference, Chess has a turn-wise BF of around 35 actions, and Go around 250 [2]. The BF has quite a large impact on the agent’s ability to reason within a game. If you have simple actions to consider on your turn e.g move up or move down, it is far easier to modify your behaviours to reach your short/long term goals. This is one of the reasons why you see more agents for game like Sonic compared to World of WarCraft. This will in my eyes be one of the most difficult challenges when training/scripting a bot for this contest.

The impact of BF is a new concept for me to consider, so I want to do a follow up post researching it more.

2. Rare reward function #

“Additionally, scoring points in the game is rare and difficult, which makes it hard to design heuristics for search algorithms or apply reinforcement learning”.

Now the second point is very important. If the reward occurs rarely, the agent will learn slowly (if at all). But if the reward is shaped too much, it will no longer reflect the distribution and might be biased towards exploitation-based behaviours (greedy) rather than exploration [3].

To consider this with more context, the goal of BB is to score as many touchdowns as possible within the game by getting the ball into the opponents ‘end-zone’. This is done by either carrying the ball into the end-zone or catching the ball whilst standing in it. A game has 16 turns; 8 turns per-coach per-half. Because of this, it is not uncommon to end a game of BB with a score of 1-0, 0-0 etc with a human-human game lasting 45-150 minutes. This means that the agent should be considering long time horizons and continuously explore new strategies that may materialise over a few turns.

Another way to tackle this aside from standard approaches e.g temporal discounting is described in this OpenAI blog post about competitive self play. Agents will “initially receive dense rewards for behaviours that aid exploration like standing and moving forward, which are eventually annealed to zero in favor of being rewarded for just winning and losing”. I like this approach as there is the opportunity to ‘scaffold’ early learning (much like in early language acquisition [4]) more before throwing the agent into the proverbial deep-end. In the FFAI contest, there is additional cumulative information returned from a game. This allows for reward shaping aswell as the default win/loss/draw scores. Below are some of the additional rewards returned.

{

'cas_inflicted': {int},

'opp_cas_inflicted': {int},

'touchdowns': {int},

'opp_touchdowns': {int},

'half': {int},

'round': {int},

'ball_progression': {int}

}

Observation representation #

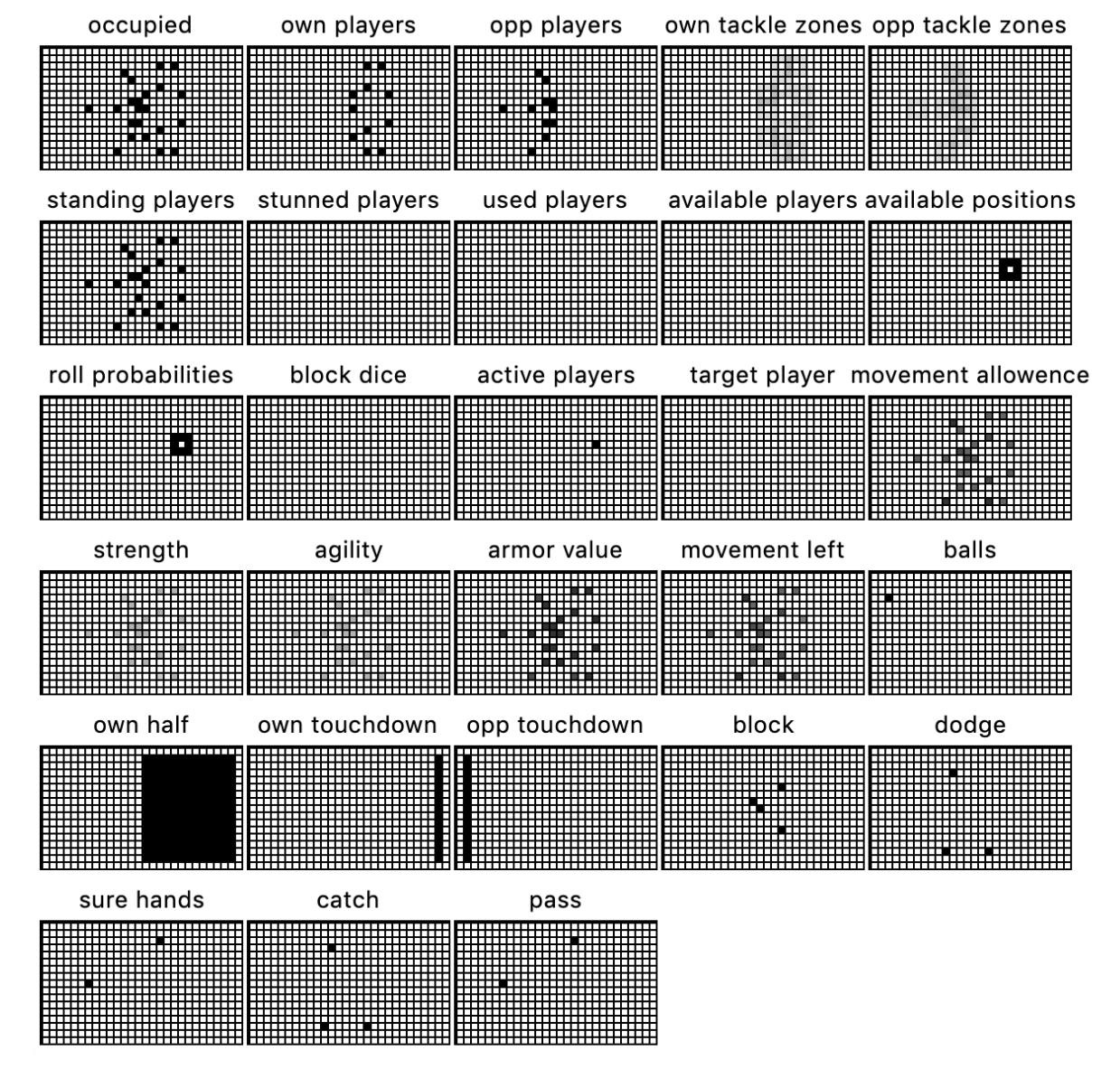

One of the coolest things to me is the observation space for the competition. In terms of observations-board, each tile on the board is represented by a combination of spatially-relevant ‘feature layers’. You can see a rendering of these below.

Figure used from Justesen et al (2019) describing the 28 spatial feature layers in the FFAI Gym observation [

1]. Each square represents the value of the tile for that layer. The number of squares changes with the board size. Here a value of 1 gives a black square and 0 a white square. Aside from this, I greatly enjoy the 8-bit aesthetic.

The observation-state space of the board covers the ‘non-spatial game-state’ conditions such as weather, opponent cheerleaders available etc. There are 5 Gym environments available with increasing difficulty. This seems like a good application for creating a curriculum based on board sizes. There are also observation-procedures describing which procedure the game is in, and observation-available-action-types showing which actions are available. After looking at the 31 action types possible to take, I’m definitely appreciating the complexity of the task ahead.

Approaches #

Self play #

Self play is the concept of getting the agent to play against itself to learn the intended behaviour. This can be the most recent copy of themselves or a selected copy by some seeding method. Self-play has enjoyed a lot of success in competitive game environments, with notable examples being TD-gammon, Alpha Go and Dota 2 [5]. It seems like the logical first step for me to implement this.

Imitation Learning #

I was about to start speculating on strategies for IL to encourage high-level tactic formation but then I realised that I’m pretty bad at BB. Let’s just say that agents won’t be solving the sample-efficiency problem by watching my games anytime soon. It is still an interesting idea to try, so I may see if I can copy some “accepted” meta starting moves as a warm-start, or try and find an online database of useful tactics that I can script.

Looking ahead: Learning the ‘meta’ #

As with any competitive game, there is a meta-game. This covers match-ups, unit rankings, tactics etc. For example here are a collection of character tier lists for Super Smash Bros Melee (2001). As you can tell the SSBM bot Phillip clearly read up on the meta by playing Captain Falcon. A meta mainly changes with a new patch/exploit. Otherwise it is typically stable and forged in the professional ‘competitive scene’, and then trickles down to casual play. For example, in BB the Undead team has the lowest loss rate of any of the factions with 31.9% in comparison to the Ogres at 58.6% [1].

In this iteration of the FFAI contest, only human v.s human is implemented. But the infrastructure is there for all of the factions such as Skaven (my favourites) and Orcs. Aswell as playing the game itself, faction-based match ups and strategies are integral to success. This will be an important consideration for next year. Similarly, learning adaptive tactics that generalise well against different opponents is important.

Conclusion #

I’m greatly looking forwards to this contest. I think there will be lots of challenges (mainly with the complexity), so seeing how other contestants cope with this will be interesting. So far in my RL research journey I have mainly focused on tasks relating to vision rather than game-based decision making. Because of this, I’m excited to ‘branch’ (hah) out my interests more.

References #

Justesen, N., Uth, L. M., Jakobsen, C., Moore, P. D., Togelius, J., & Risi, S. (2019, August). Blood bowl: A new board game challenge and competition for AI. In 2019 IEEE Conference on Games (CoG) (pp. 1-8). IEEE.

Berner, C., Brockman, G., Chan, B., Cheung, V., Dębiak, P., Dennison, C., & Józefowicz, R. (2019). Dota 2 with Large Scale Deep Reinforcement Learning. arXiv preprint arXiv:1912.06680.

Parisi, S., Tateo, D., Hensel, M., D’Eramo, C., Peters, J., & Pajarinen, J. (2020). Long-Term Visitation Value for Deep Exploration in Sparse Reward Reinforcement Learning. arXiv preprint arXiv:2001.00119.

Dieterich, S. E., Assel, M. A., Swank, P., Smith, K. E., & Landry, S. H. (2006). The impact of early maternal verbal scaffolding and child language abilities on later decoding and reading comprehension skills. Journal of School Psychology, 43(6), 481-494.

Bansal, T., Pachocki, J., Sidor, S., Sutskever, I., & Mordatch, I. (2017). Emergent complexity via multi-agent competition. arXiv preprint arXiv:1710.03748.